In the history of computing architecture evolution, certain moments do not arrive silently. The launch of NVIDIA's GB200 NVL72 is one such moment—it is not merely a new product, but a complete reconceptualization of the server itself.

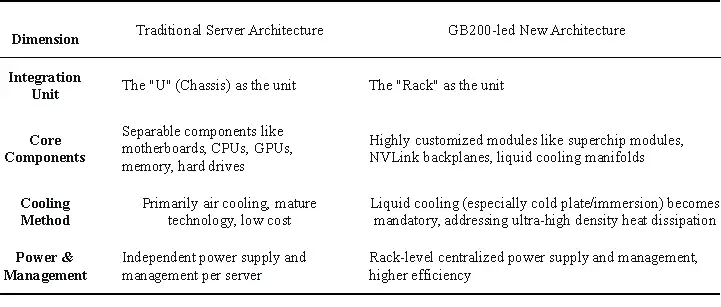

For three decades, a "server" has meant a standardized chassis unit containing a motherboard, CPU, memory, storage drives, and expansion cards, interconnected via industry-standard interfaces. We measure it in rack units ("U"), rack and stack it in cabinets, and connect to it over a network. The GB200 NVL72 dissolves this long-standing definition.

Its essence is no longer "a server," but rather a "computer" that takes the form of an entire rack. Traditional server components are deconstructed and then reintegrated at rack scale, through the NVLink switch fabric, liquid cooling, and rack-level power management, into an indivisible computational whole. (NVLink-C2C, by contrast, is the chip-to-chip link that joins CPU and GPU inside each Superchip.) This is no simple upgrade; it is a profound paradigm shift.

1- What is a "Server"? The Answer to This Question is Changing

In that traditional chassis, the motherboard, CPU, memory, storage drives, and expansion cards all adhere to industry standards, so each can be replaced or upgraded independently.

But the change brought by the GB200 is fundamental:

· The computational heart is no longer discrete CPUs and GPUs, but the GB200 Superchip, a single module that tightly integrates one Grace CPU with two Blackwell GPUs.

· Interconnect no longer relies on standard PCIe slots, but on customized NVLink backplanes.

· Cooling is no longer an optional accessory, but an integrated liquid cooling system.

· The fundamental unit of deployment has shifted from the "chassis" to the "rack."

The various components of the traditional server have been "torn apart," only to be reassembled and integrated anew at the larger scale of the rack. This is not evolution; it is re-architecture.

Table 1 - Reshaping the Physical Architecture: From "Chassis" to "Rack"

2- The Three Pillars of "Rack-Scale Architecture"

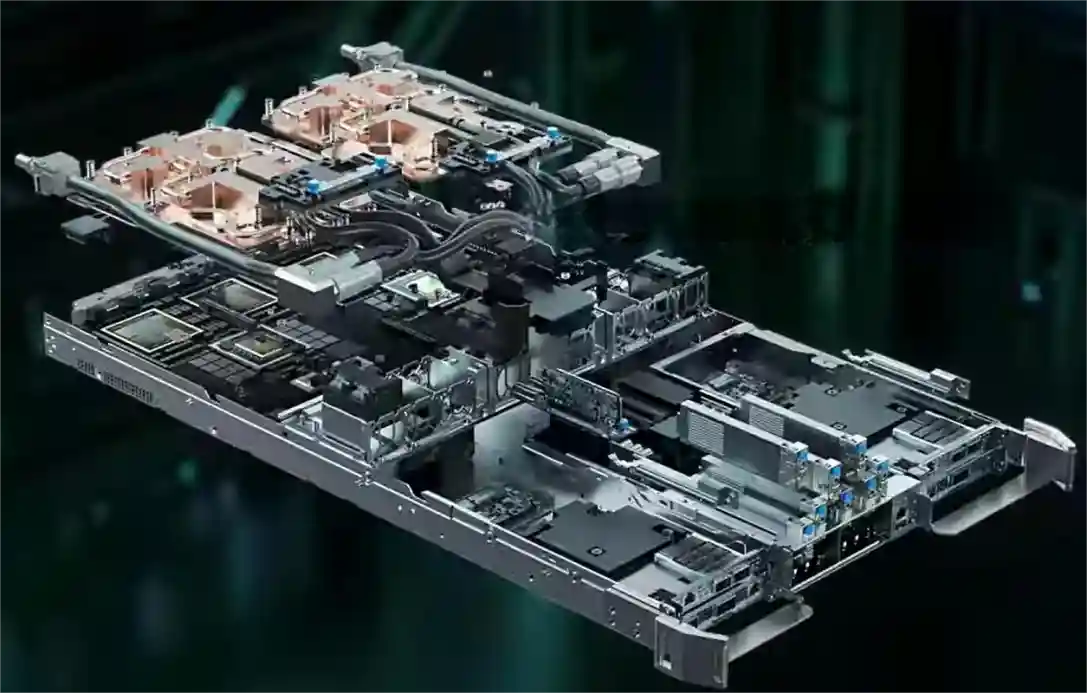

Traditional server design balances trade-offs within a sealed chassis. In contrast, the rack-scale architecture represented by the GB200 treats cooling, interconnect, power, and management as one system, designed together from the rack level down. This is no longer about stacking components, but about deep, system-level integration, and it rests on three core pillars.

Pillar One: From "Network Interconnect" to "Backplane Bus"

In traditional data centers, servers are independent nodes communicating over a network (like Ethernet). Within the GB200 NVL72, however, the NVLink Fabric functionally replaces the traditional motherboard bus, becoming the "skeleton" connecting all computing units. This shift enables the 72 GPUs in the rack to work in concert like a single, massive GPU, with far higher communication bandwidth and far lower latency than a network link between separate servers can provide.
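To give a feel for the gap described above, the following is a minimal sketch that compares ideal transfer times over a per-GPU NVLink connection and a network port. The bandwidth constants are assumptions chosen for illustration (900 GB/s per direction for NVLink, based on the commonly quoted 1.8 TB/s bidirectional figure, and a 400 Gb/s Ethernet port), and the payload size is hypothetical. Latency and protocol overhead are ignored.

```python
# Rough transfer-time comparison. All figures are illustrative assumptions.
NVLINK_GB_PER_S = 900.0    # assumed per-GPU NVLink bandwidth, one direction
ETHERNET_GB_PER_S = 50.0   # assumed 400 Gb/s network port, i.e. 50 GB/s


def transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Ideal time to move a payload, ignoring latency and protocol overhead."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


def speedup(payload_gb: float) -> float:
    """Ratio of network transfer time to NVLink transfer time."""
    return (transfer_seconds(payload_gb, ETHERNET_GB_PER_S)
            / transfer_seconds(payload_gb, NVLINK_GB_PER_S))


if __name__ == "__main__":
    payload = 140.0  # hypothetical payload: 70B parameters at 2 bytes each
    print(f"NVLink:   {transfer_seconds(payload, NVLINK_GB_PER_S):.3f} s")
    print(f"Ethernet: {transfer_seconds(payload, ETHERNET_GB_PER_S):.3f} s")
    print(f"Ratio:    {speedup(payload):.0f}x")
```

Under these assumed figures the per-GPU bandwidth gap is roughly one order of magnitude. Real workloads also benefit from lower latency and from every GPU in the rack being reachable over the same fabric, which this sketch does not model.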

Pillar Two: Cooling Evolves from "Supporting Facility" to "Core Subsystem"

When power density rises from tens of kilowatts per rack to more than a hundred kilowatts, traditional air cooling can no longer remove the heat effectively. Liquid cooling is no longer an optional "supporting facility"; it has become a core subsystem on par with computing and interconnect. Its design directly determines the system's sustained performance and operational stability, making it the key factor in moving from "usable" to "high-performance."
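The reason air runs out of headroom can be sketched with the basic heat balance P = m_dot * c_p * delta_T. The example below estimates the coolant flow needed to carry away a rack's heat with water and with air. The 120 kW heat load, the temperature rises, and the fluid properties are assumptions for illustration only, not measured values for any specific system.

```python
# Coolant flow needed to carry away a rack's heat: P = m_dot * c_p * delta_T.
# All inputs below are illustrative assumptions.
RACK_HEAT_W = 120_000.0  # assumed rack heat load in watts

# Approximate fluid properties near room temperature, with assumed temperature rise.
WATER = {"cp": 4186.0, "density": 997.0, "delta_t": 10.0}  # J/(kg*K), kg/m^3, K
AIR = {"cp": 1005.0, "density": 1.2, "delta_t": 15.0}      # J/(kg*K), kg/m^3, K


def mass_flow_kg_per_s(heat_w: float, cp: float, delta_t: float) -> float:
    """Mass flow required to absorb heat_w with a coolant temperature rise of delta_t."""
    if cp <= 0 or delta_t <= 0:
        raise ValueError("cp and delta_t must be positive")
    return heat_w / (cp * delta_t)


def volume_flow_m3_per_s(heat_w: float, fluid: dict) -> float:
    """Volumetric flow for the same heat load, using the fluid's density."""
    m_dot = mass_flow_kg_per_s(heat_w, fluid["cp"], fluid["delta_t"])
    return m_dot / fluid["density"]


if __name__ == "__main__":
    water = volume_flow_m3_per_s(RACK_HEAT_W, WATER)
    air = volume_flow_m3_per_s(RACK_HEAT_W, AIR)
    print(f"Water: {water * 60_000:.0f} L/min")
    print(f"Air:   {air:.2f} m^3/s")
    print(f"Air needs about {air / water:.0f} times the volume flow of water")
```

With these assumptions, water needs on the order of a couple of hundred litres per minute, while air needs several cubic metres per second through a single rack, which is why the cooling loop has to be designed together with the compute hardware.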

Pillar Three: The "Centralization and Re-architecting" of Management and Power

The GB200 adopts rack-level centralized power supply and management. This is not merely about higher power conversion efficiency; it also couples the components of the rack more tightly to one another. The benefits include simplified cabling and unified management visibility. However, it also expands the potential failure domain from a single server to the entire rack, placing new demands on operations and maintenance.
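The effect of a larger failure domain can be illustrated with a small calculation. The sketch below compares the share of a cluster's GPUs that goes offline when one failure domain is lost. The cluster size and the 8-GPU server used for comparison are hypothetical values chosen for illustration.

```python
# Share of cluster capacity lost when one failure domain goes down.
# Cluster size and the 8-GPU server are hypothetical, for illustration only.

def capacity_lost_fraction(total_gpus: int, domain_gpus: int) -> float:
    """Fraction of cluster GPUs taken offline by the loss of one failure domain."""
    if total_gpus <= 0 or not 0 < domain_gpus <= total_gpus:
        raise ValueError("need 0 < domain_gpus <= total_gpus")
    return domain_gpus / total_gpus


if __name__ == "__main__":
    cluster = 576  # hypothetical cluster: 8 racks of 72 GPUs, or 72 servers of 8 GPUs
    for label, domain in (("8-GPU server", 8), ("72-GPU rack", 72)):
        lost = capacity_lost_fraction(cluster, domain)
        print(f"{label}: {lost:.1%} of capacity lost per failure")
```

The same fault therefore removes nine times as much capacity in this example, which is why spare-capacity planning and maintenance procedures have to be defined at rack level rather than server level.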

3- The Restructuring and Shift of the Value Chain

The influence of the "rack-scale architecture" represented by the GB200 extends far beyond the technology itself: it is reshaping the industry value chain. The traditional distribution of value is being disrupted, while new high-value positions are forming around system-level integration and hardware-software co-design.

Server Vendors: Strategic Transformation from 'Definers' to 'Integrators'

Traditional server giants, like Dell and HPE, are facing a migration of their core value. The capabilities they once thrived on—motherboard design, system optimization, and standardized manufacturing—are seeing their importance diminish within highly customized, pre-integrated systems like the GB200.

However, new strategic opportunities lie within this crisis:

· Value Moving Upstream: The competitive focus shifts from internal server design to rack-level liquid cooling, power efficiency, and structural layout.

· Value Extending Outward: Core competitiveness extends from hardware manufacturing to professional services for large-scale deployment, cross-platform operational management, and integration capabilities with enterprise IT environments.

This signifies that the role of server vendors is transforming from being "definers" of standard products to becoming "advanced integrators and enablers" of complex systems.

Cloud Vendors' 'Strategic Procurement': Balancing Dependency and Autonomy

For hyperscale cloud vendors, the GB200 is both a strategic necessity and a strategic warning.

· Short-term Tactic: As the ultimate benchmark for computing power, procuring GB200 is an inevitable choice to meet market demand for top-tier AI compute.

· Long-term Strategy: To mitigate supply chain risks and technology lock-in, developing in-house AI chips (such as Google's TPU and AWS's Trainium and Inferentia) has become a core strategy for future autonomy.

The actions of cloud vendors vividly reflect the complex trade-offs between efficiency and autonomy, and between short-term market needs and long-term control.

The Evolution of End-User Decision-Making: From Evaluating 'Components' to Assessing 'Output'

For end-user technical decision-makers (CTOs, Technical VPs), the procurement evaluation paradigm is undergoing a fundamental shift.

Traditional Procurement Checklist:

· CPU core count and clock speed

· GPU model and quantity

· Memory and storage capacity and speed

Current Strategic Considerations:

· Efficiency Metrics: Performance per watt, total model training time

· Total Cost of Ownership (TCO): Comprehensive cost including hardware, energy consumption, maintenance, and manpower

· Business Agility: Time cycle from deployment to productive output

This shift marks a critical evolution in corporate technology procurement from a cost-center mindset to a productivity-investment mindset.
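The output-oriented metrics listed above can be expressed as a short calculation. The sketch below is one possible way to compute TCO, performance per watt, and cost per unit of output. Every field, parameter, and the PUE factor (power usage effectiveness, the ratio of facility power to IT power) is a hypothetical input; real evaluations would use measured workload data and actual quotations.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class SystemOption:
    """One candidate system. Every field is a hypothetical input."""
    name: str
    hardware_cost: float        # purchase price, any currency
    power_kw: float             # average IT power draw in kW
    annual_ops_cost: float      # maintenance plus staffing per year
    relative_throughput: float  # useful output per year, arbitrary units


def total_cost_of_ownership(opt: SystemOption, years: float,
                            price_per_kwh: float, pue: float = 1.0) -> float:
    """Hardware plus energy plus operations over the evaluation period."""
    energy_kwh = opt.power_kw * pue * HOURS_PER_YEAR * years
    return (opt.hardware_cost
            + energy_kwh * price_per_kwh
            + opt.annual_ops_cost * years)


def performance_per_watt(opt: SystemOption) -> float:
    """Throughput units per watt of IT power."""
    return opt.relative_throughput / (opt.power_kw * 1000.0)


def cost_per_unit_output(opt: SystemOption, years: float,
                         price_per_kwh: float, pue: float = 1.0) -> float:
    """TCO divided by the total output delivered over the period."""
    tco = total_cost_of_ownership(opt, years, price_per_kwh, pue)
    return tco / (opt.relative_throughput * years)
```

Comparing two options on `cost_per_unit_output` rather than on purchase price alone is the kind of shift from component checklist to output assessment that the section describes.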

The GB200 has redefined the compute unit—from the "server" to the "rack." This is not merely a performance upgrade, but a complete paradigm shift in architecture. The pursuit of efficiency has transcended mere component stacking, and the industry value chain is being restructured. In this transformation, the only certainty is this: adapt to it, or be left behind.

A new computational epoch has begun.

We will regularly update you on technologies and information related to thermal design and lightweighting, sharing them for your reference. Thank you for your attention to Walmate.